Residual DCN

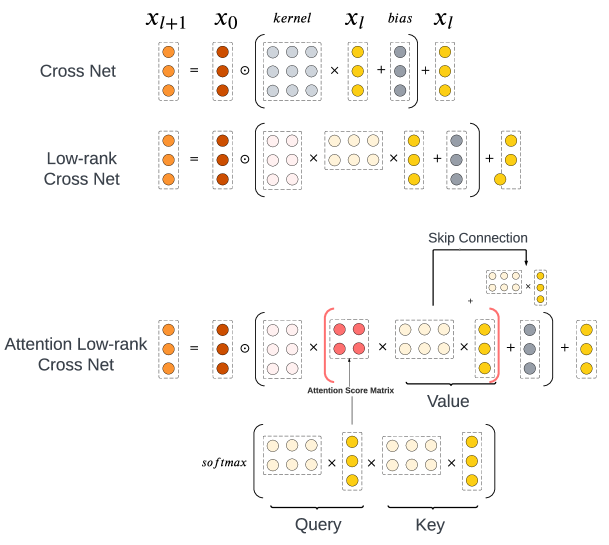

Two layers of [[DCNv2]] provide sufficient feature crossing, but they significantly increase training and inference time. Two strategies are used to improve efficiency:

- Replaced the weight matrix with two skinny matrices resembling a low-rank approximation #card

- Reduced the input feature dimension by replacing sparse one-hot features with embedding-table look-ups, resulting in nearly a 30% reduction.
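
A minimal sketch of the first strategy, assuming the standard DCNv2 cross layer form `x_{l+1} = x_0 ⊙ (W x_l + b) + x_l` with `W` factored as `U Vᵀ`. The names `low_rank_cross_layer` and `param_counts` and the shapes are illustrative assumptions, not taken from the source.

```python
import numpy as np

def low_rank_cross_layer(x0, xl, U, V, b):
    """One low-rank cross layer (sketch).

    x0, xl: (batch, d) input to the cross net and current layer input.
    U, V:   (d, r) skinny matrices with r << d, standing in for a (d, d) W.
    b:      (d,) bias.
    """
    projected = xl @ V            # (batch, r): project down to rank r
    restored = projected @ U.T    # (batch, d): project back up to d
    return x0 * (restored + b) + xl   # element-wise cross plus residual term

def param_counts(d, r):
    """Weight parameters of a full (d, d) matrix vs. the two skinny matrices."""
    return d * d, 2 * d * r
```

With `d = 1024` and `r = 64`, for example, `param_counts` gives 1,048,576 weights for the full matrix versus 131,072 for the factored form, which is where the savings come from.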

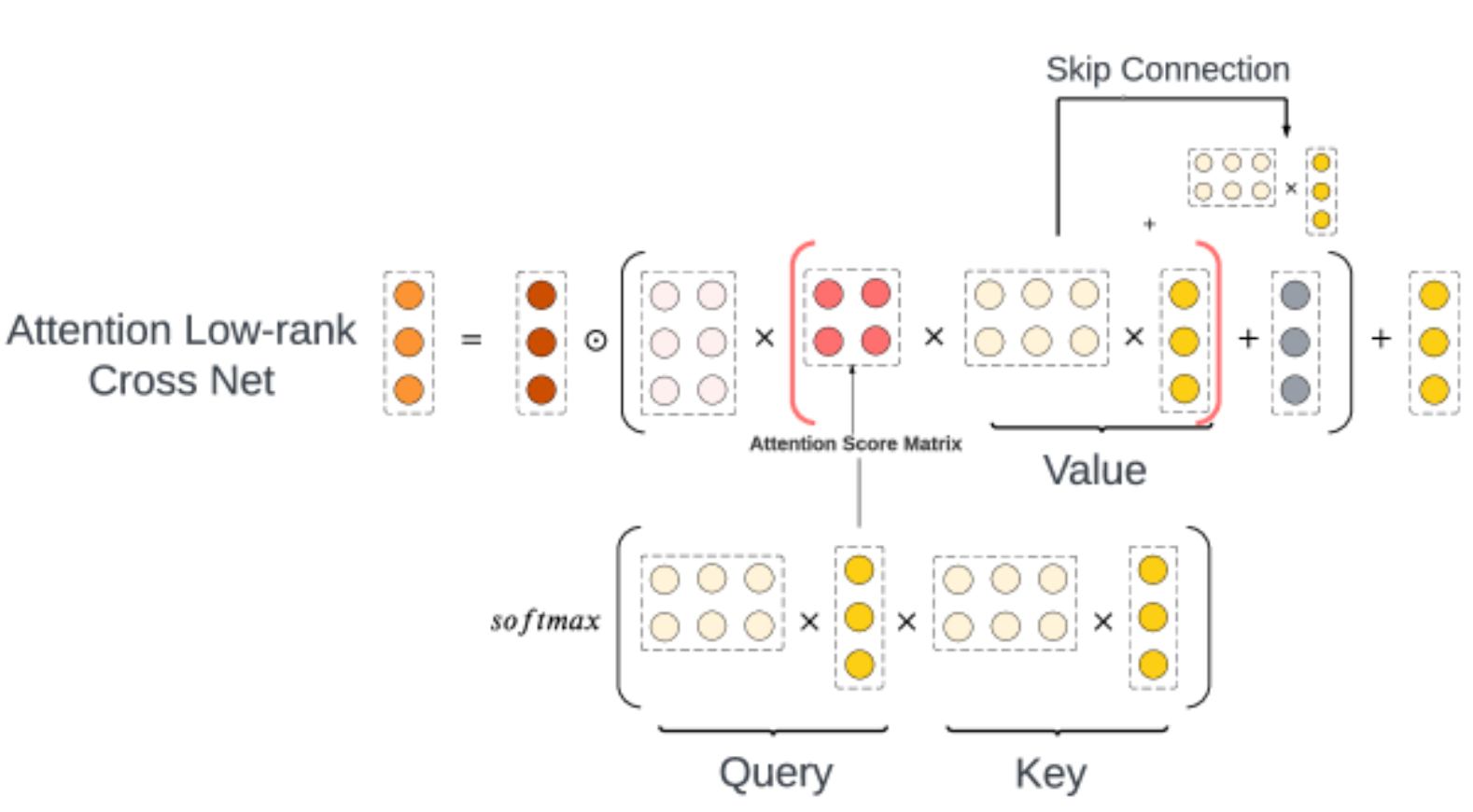

- An attention mechanism is introduced into the low-rank cross net #card

A temperature balances the complexity of the learned feature interactions.

The skip connection and fine-tuning the attention temperature help the model learn more complex feature correlations while keeping training stable.
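
These notes do not give the exact formulation, so the following is only one possible reading: the low-rank projection is duplicated into query, key, and value projections, a temperature-scaled softmax score matrix sits between the down- and up-projections, and a skip connection adds the layer input back. All names (`attention_scores`, `attention_low_rank_cross`, `Vq`, `Vk`, `Vv`) and the outer-product scoring are assumptions for illustration.

```python
import numpy as np

def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)   # subtract max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def attention_scores(q, k, temperature=1.0):
    """Per-example (r, r) score matrix from the outer product of q and k.

    q, k: (batch, r). A higher temperature flattens the scores toward uniform.
    """
    logits = q[:, :, None] * k[:, None, :] / temperature   # (batch, r, r)
    return softmax(logits, axis=-1)

def attention_low_rank_cross(x0, xl, Vq, Vk, Vv, U, b, temperature=1.0):
    """Low-rank cross layer with an attention score between the projections.

    x0, xl:      (batch, d)
    Vq, Vk, Vv:  (d, r) query, key, and value down-projections (assumed).
    U:           (d, r) up-projection; b: (d,) bias.
    """
    q, k, v = xl @ Vq, xl @ Vk, xl @ Vv                 # each (batch, r)
    scores = attention_scores(q, k, temperature)        # (batch, r, r)
    attended = np.einsum("bij,bj->bi", scores, v)       # (batch, r)
    crossed = x0 * (attended @ U.T + b)                 # (batch, d)
    return crossed + xl                                 # skip connection
```

In this sketch a very large temperature makes every row of the score matrix nearly uniform, so the layer mixes the value projection evenly, while a small temperature lets a few entries dominate; this is the knob the note refers to.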